Layer 8 or Human Factors: Enhancing Security through a People-Centric Approach

As a seasoned Cyber Security Consultant, I've come to realise a fundamental truth that often gets glossed over in our industry. While technology plays a crucial role in safeguarding our digital assets, it's the human factor that truly determines the success or failure of our security measures.

Or to put it bluntly… Security is a people problem.

It is individuals who write malicious code, exploit vulnerabilities, inadvertently disclose sensitive information and make the errors that compromise our systems. It is therefore imperative to acknowledge and address the impact of human behaviour on the overall security landscape.

Now, let's shift our perspective to the defenders' side of the fence and ask ourselves: why do people so often do what we perceive as the "wrong" thing? And why is it that when we ask ten different people about the same risk, we receive ten distinct (and often contradictory) answers?

Psychology of Risk: Understanding Human Behaviour in Security

One aspect of cybersecurity that cannot be overlooked is the fascinating field of the Psychology of Risk. Extensive research has been conducted into the relationship between risk perception and human behaviour, shedding light on the factors that influence how we make decisions. By exploring this field, we can gain valuable insight into why different individuals perceive risks differently and how cognitive biases shape our responses.

Consider this: Why do some people see the glass as half full while others see it as half empty?

The Psychology of Risk asks questions like these, delving into the underlying factors that influence our perceptions. It explores how cognitive biases, shaped by our personal histories, cultural backgrounds, and the media we consume, impact our decision-making processes.

If you're eager to delve into this subject, a quick Google search for "Psychology of Risk" will open the door to a vast array of resources. To get started, I recommend watching this easy-to-digest introduction video with a technology flavour (https://www.youtube.com/watch?v=InVAztkJtFc).

While research in this field does not always agree on the precise mechanisms and explanations, the findings consistently point to one essential takeaway:

People are generally not great at accurately assessing risks.

This is especially true within an organisational context, where personal judgement and individual thinking can never be entirely eliminated. Even when two individuals are exposed to the same risk factors, they may well arrive at entirely different conclusions.

As cybersecurity professionals, we need to factor these findings into all our dealings with people. Recognise that risk perception varies and that other people's understanding of a risk may not match our own. To communicate security measures and strategies effectively, we must present the facts in a way that matches the audience's level of understanding; only then is our message likely to be received and internalised.

It's also important to understand that no single product (or group of products) can provide absolute security. Security measures generally trade functionality and/or convenience for security, which leads to an interesting conclusion...

If a security control makes it hard for people to do their job, they will find ways around it, and those workarounds are often less secure than having no control in place at all.

This leads us to a somewhat frustrating realisation: focusing solely on products and tools is insufficient.

So, where should we begin?

We need to start with people. Education is key, but it must be approached with empathy and without resorting to naming and shaming. We need to create an environment where individuals feel comfortable speaking up about potential security concerns without fear of retribution. It's crucial to foster critical thinking skills, enabling employees to understand cause-and-effect relationships and make informed decisions. Encourage your team to put themselves in the shoes of an attacker and think about how they could impact the system or business (an exercise that can be eye-opening!).

Integrating security into job descriptions is an effective way to embed it within an organisation's culture. Clear and simple policies are vital, as complex and convoluted rules are more likely to be disregarded. It's also important to acknowledge that not all policies, procedures, or technologies fit every scenario. Flexibility is key, allowing for customised approaches that align with different business needs.

To sum it all up...

We must remember that people generally do not set out to do the wrong thing; they are simply human. People will act in whatever way is most convenient for them and are often unaware of their own blind spots. To promote a security-conscious culture, we can increase the perceived benefits of cautious behaviour (for example, by offering rewards) while reducing the perceived overhead of complying with security measures. By placing people at the forefront of our security strategies, it's possible to create an environment where technological controls and human actions work in harmony to protect our valuable assets.

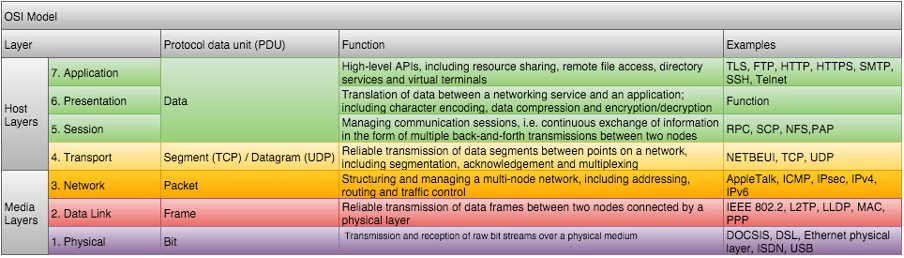

* Note on the title: "Layer 8" is a tongue-in-cheek extension of the seven-layer OSI Model. It denotes an informal eighth layer sitting above the application layer: the human element, i.e. the person using any IT system.

Open Systems Interconnection Basic Reference Model - ISO/IEC 7498-1:1994 - https://www.iso.org/standard/20269.html

I was tempted to use PEBKAC (Problem Exists Between Keyboard and Chair) in the title rather than "Layer 8"; however, the term has fairly negative connotations and is widely used as a joke or put-down.

Look out for more blog posts coming soon that explore the requirements and processes for developing and implementing Governance, Risk management and Compliance capabilities within your environment. And, as always, please reach out to us directly to discuss these topics further.

May 18, 2023